What is Technical SEO?

Technical SEO focuses on optimizing your website to achieve higher organic rankings. It covers indexing, crawling, rendering, page speed, site structure, migrations, Core Web Vitals, and more. The ultimate goal of technical SEO is to optimize your site’s architecture so that search engines and users can find your pages more easily.

You might have the best website and content, but you will find ranking impossible with messed-up technical SEO. Thus, we’ve curated a list of common technical SEO issues you will likely face while on your journey to rank on the first page.

Here is the list of Top Technical SEO Issues

1. No HTTPS Security

Your site is insecure if you see a grey or red background and a “not secure” warning when you type your domain name. No one wants to enter an insecure web page and risk their data and privacy. This warning might urge your users to run back to the SERP and make it hard for you to rank.

You don’t want to ward off your users as soon as they enter your site. But this is likely to happen if you do not have HTTPS security. HTTPS security is paramount to making your users feel at ease while navigating your website.

An HTTPS-Secured Site Gains the Trust of Users by Ensuring:

- Data encryption

- Data integrity

- Privacy

- Authenticity

- Secure networks

2. Pages Unable to Index

Type site:yoursitename.com into the Google search bar to see which of your pages are indexed. Are you able to find yourself?

If your answer is no, it’s time to dig deeper into the problem. Chances are there are some issues with your indexation.

Sometimes the page appears so low in search results that many assume it is not on Google. In reality, it is your technical SEO that is the real culprit. If your pages aren’t indexed, they are as good as not existing – and they will certainly not rank on the search engines either.
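
One common cause of an unindexed page is a robots meta tag that says noindex. The sketch below, which uses only Python's standard library, checks a page's HTML for that tag. The class and function names are illustrative assumptions, and this covers just one possible cause, not a full indexation audit.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects the directives of every <meta name="robots"> tag."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = dict(attrs)
        if (attr.get("name") or "").lower() == "robots":
            content = attr.get("content") or ""
            # Directives are comma-separated, e.g. "noindex, follow".
            self.directives += [d.strip().lower() for d in content.split(",")]


def blocks_indexing(html: str) -> bool:
    """Return True if the page carries a robots meta tag with 'noindex'."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives
```

Calling blocks_indexing on the HTML of a page you expect to rank should return False; a True result means the page itself is asking search engines not to index it.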

3. Duplicate Content

Do you know what users and Google love? Unique content.

According to SEMRush research, 50% of analyzed websites face duplicate content issues. If you’re trying to rank first on search engine results, duplicate content is a huge problem.

Programmed to make the web a better place for users, search engines intend to promote valuable, unique content with a low similarity index.

You can use tools such as SEMRush, Screaming Frog, or Deep Crawl to find out if you have content with a high similarity index. These tools crawl your site and flag pages whose content closely matches other pages.
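
To make the idea of a similarity index concrete, here is a minimal sketch that compares two texts by the overlap of their three-word sequences (Jaccard similarity over word shingles). This is an assumed, simplified measure chosen for illustration; the tools named above use their own methods, and the function names are hypothetical.

```python
import re


def shingles(text: str, size: int = 3) -> set:
    """Split text into lowercase words and return the set of word n-grams."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if len(words) < size:
        # Texts shorter than one shingle are treated as a single unit.
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def similarity(a: str, b: str, size: int = 3) -> float:
    """Jaccard similarity of the two texts' shingle sets, from 0.0 to 1.0."""
    sa, sb = shingles(a, size), shingles(b, size)
    if not sa and not sb:
        return 0.0
    return len(sa & sb) / len(sa | sb)
```

A score near 1.0 suggests two pages are near-duplicates, while a score near 0.0 suggests the content is unique. What counts as "too similar" is a judgment call, so treat any cut-off as a starting point.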

4. No Meta Description

A meta description is a concise snippet that summarizes what your page is all about. It helps web users decide whether to visit your site or not.

Aim to write a meta description of no more than 150 characters. Staying within the character limit will ensure that your whole meta description is visible in the SERP. When users read it, they know whether the site can answer their query and can click through accordingly.

Here is a screenshot of the meta description from our website.

A quality description within the character limit can increase the number of people who click through to your site. This can raise your traffic and engagement, improving your rankings in the SERP.

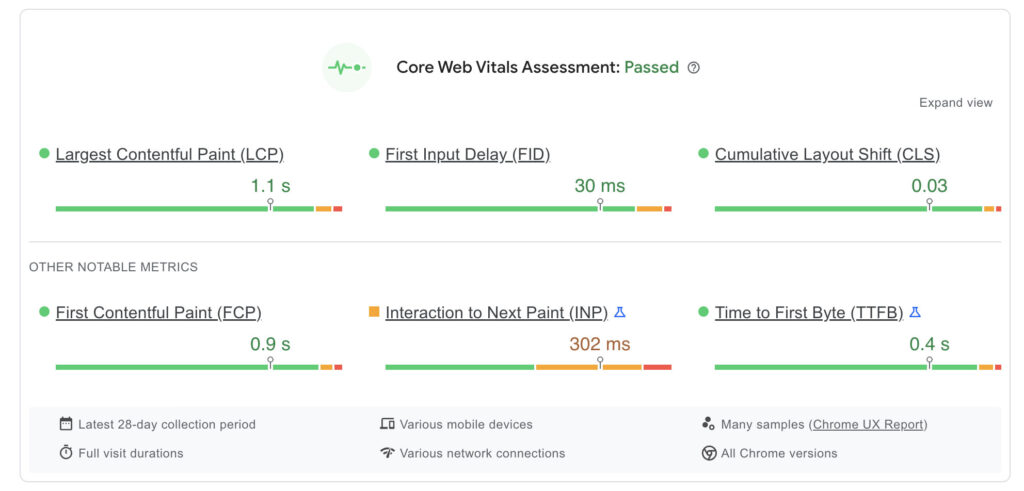
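
A quick way to audit this is to pull the meta description out of a page's HTML and measure it. The sketch below uses Python's standard library and treats the limit of 150 as a character count; the names and the three result labels are assumptions made for illustration.

```python
from html.parser import HTMLParser

MAX_LENGTH = 150  # character limit suggested in this article


class MetaDescriptionParser(HTMLParser):
    """Captures the first <meta name="description"> content value."""

    def __init__(self):
        super().__init__()
        self.description = None

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag == "meta" and (attr.get("name") or "").lower() == "description":
            if self.description is None:
                self.description = (attr.get("content") or "").strip()


def check_meta_description(html: str) -> str:
    """Return 'missing', 'too long', or 'ok' for the page's meta description."""
    parser = MetaDescriptionParser()
    parser.feed(html)
    if not parser.description:
        return "missing"
    if len(parser.description) > MAX_LENGTH:
        return "too long"
    return "ok"
```

Running check_meta_description over each page's HTML gives a simple list of pages that need a description written or trimmed.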

5. Slow Website Speed

No one likes waiting. Your website loading speed matters – to your users and Google.

Server response time is critical for a good user experience and for achieving good search rankings. Websites that are slow to load are penalized, which causes them to slide down the rankings.

If a page takes more than two to three seconds to load, Google may reduce how often it crawls your website. This means fewer pages get indexed, and you lose the potential of those pages ranking on the SERP.

To rank higher on the search engine, ensure that your page loads the most important HTML content quickly.

You can use Google PageSpeed Insights to identify your page speed problems and resolve them if you can.

Here is a screenshot taken from the Google PageSpeed Insights tool.
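
For a rough first check before reaching for a full audit tool, you can time how long the server takes to deliver a page's HTML. The sketch below is a minimal example using Python's standard library; the function names are hypothetical, and it measures only the HTML download, not images, scripts, or rendering, so it will understate what a real visitor experiences.

```python
import time
import urllib.request


def fetch_page(url: str) -> bytes:
    """Download the full response body for a URL."""
    with urllib.request.urlopen(url, timeout=10) as response:
        return response.read()


def timed_fetch(url: str, fetch=fetch_page) -> float:
    """Return the seconds taken to fetch url with the given fetch function."""
    start = time.perf_counter()
    fetch(url)
    return time.perf_counter() - start


def is_slow(seconds: float, threshold: float = 3.0) -> bool:
    """Flag load times above the threshold (three seconds by default)."""
    return seconds > threshold
```

For example, is_slow(timed_fetch("https://yoursitename.com")) tells you whether the HTML alone already exceeds the three-second mark. The fetch parameter exists so the timing logic can be tried without a network connection.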

6. Broken Images

Broken images can severely hurt your ranking.

Nothing annoys people more than not getting what they came for. No one will continue navigating through a website where they see a broken image instead of a proper one. Your users will likely bounce away from your site if they have a terrible user experience.

The bots, too, will divert traffic from your site to your competitors’ sites. This will negatively affect your authority and rankings in the SERP.

Don’t let such easily preventable problems chase away potential customers and drag down your page ranking.
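
The first step in catching broken images is knowing which image URLs a page references. The sketch below collects every image source from a page's HTML, resolves relative paths against the page URL, and counts image tags that have no source at all. The names are illustrative assumptions; checking whether each collected URL actually loads is a separate step.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class ImageCollector(HTMLParser):
    """Gathers absolute image URLs and counts <img> tags without a src."""

    def __init__(self, base_url: str):
        super().__init__()
        self.base_url = base_url
        self.sources = []      # absolute image URLs to verify
        self.missing_src = 0   # <img> tags with no usable src

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        src = (dict(attrs).get("src") or "").strip()
        if src:
            # Resolve relative paths such as "/a.png" against the page URL.
            self.sources.append(urljoin(self.base_url, src))
        else:
            self.missing_src += 1


def collect_images(html: str, base_url: str):
    """Return (list of absolute image URLs, count of images missing a src)."""
    collector = ImageCollector(base_url)
    collector.feed(html)
    return collector.sources, collector.missing_src
```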

7. Poor Mobile Experience

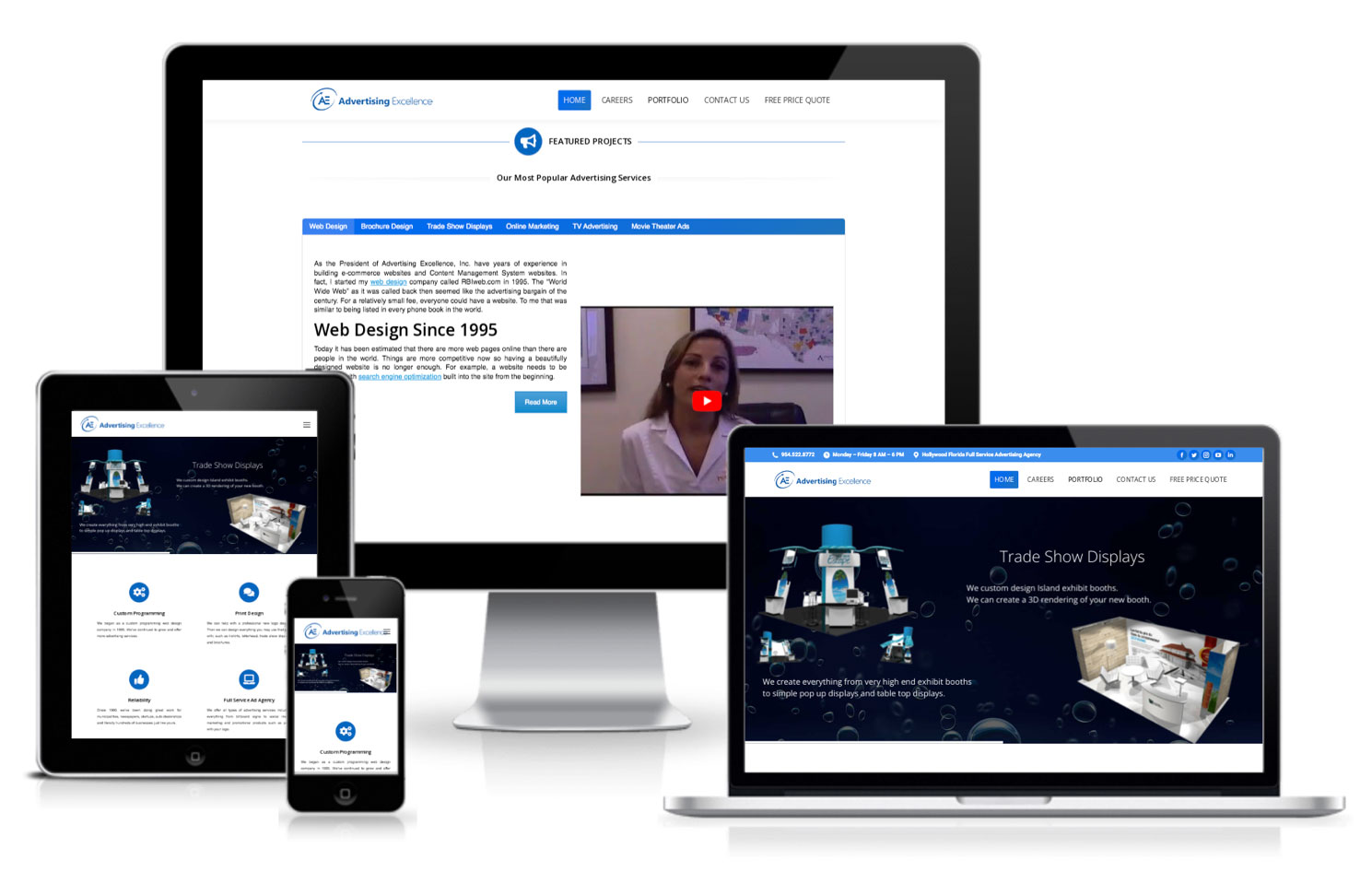

Mobile usage all across the globe is at an all-time high. Most people prefer browsing on their smartphones or tablets rather than on their desktops. Google also ranks websites based on their mobile version. Having a mobile-friendly website is more critical than it has ever been before.

See how well we optimized our website for all devices.

A website that is not mobile-ready can instantly push the users to click away. It can negatively impact your page ranking by upping your site’s bounce rate.

Consider this:

There were over 294.15 million smartphone users in the U.S. alone.

Around 40% of web visitors will skip your website if it’s not mobile-friendly.

8. Broken Links

For an exceptional SERP ranking, it is crucial to include internal and external links. These links on your website prove to the search engine crawlers and visitors that you have high-quality content. This invites more engagement which ups your authority.

However, broken links within your website can affect the ranking of your page. Over time, links can break for several reasons, for example when the externally linked page no longer exists.

Ensure you perform routine site audits to check for broken or outdated links.

You can use online broken link checker tools to check for broken links.
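
If you would rather script a routine audit, a broken link check can be sketched in a few lines of Python. The example below sends a HEAD request to each URL and reports those that fail or return an error status. The function names are hypothetical, and some servers reject HEAD requests, so a real audit may need to fall back to GET.

```python
import urllib.error
import urllib.request


def http_status(url: str) -> int:
    """Send a HEAD request and return the HTTP status code (0 if unreachable)."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=10) as response:
            return response.status
    except urllib.error.HTTPError as error:
        # The server answered, but with an error status such as 404.
        return error.code
    except OSError:
        # URLError, timeouts, and connection failures are all OSError subclasses.
        return 0


def find_broken(urls, get_status=http_status):
    """Return (url, status) pairs that are unreachable (0) or 400 and above."""
    broken = []
    for url in urls:
        status = get_status(url)
        if status == 0 or status >= 400:
            broken.append((url, status))
    return broken
```

Passing the list of links found on your pages to find_broken returns only the ones that need attention. The get_status parameter lets you swap in a different checker or test the logic offline.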

9. XML Sitemaps

An XML sitemap is like a website dictionary that lists the site’s important pages. It ensures that Google can find and crawl them all. An XML sitemap helps Google search bots understand what your website is about so that they can do their job effectively and efficiently.

Additionally, it helps search engines understand your website’s structure, making it easier for your pages to rank.

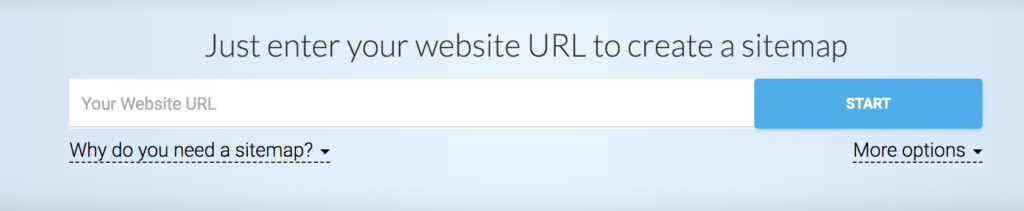

You can use sitemap generator tools to generate sitemaps.

If you do not have an XML sitemap for your website, requests for the sitemap URL may return a 404 error. It will also make your content less discoverable, and your SERP ranking may fall.
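
For a small site, a basic sitemap is simple enough to generate yourself. The sketch below builds a minimal sitemap in the sitemaps.org format from a list of page URLs using Python's standard library. The function name is an assumption, and optional fields such as last-modified dates are left out.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls) -> str:
    """Return sitemap XML listing each URL in a <url><loc> entry."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body
```

Saving the result of build_sitemap(["https://yoursitename.com/", "https://yoursitename.com/blog"]) as sitemap.xml at the root of your site gives crawlers a list of the pages you consider important.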

10. Navigation Issues

Poor user experience is one of the biggest causes of bad SERP ranking. If the users who hop on your site find it challenging to navigate through, they will never return.

Even if you have the best content, your ranking will fall if your website is not user-friendly. Poor navigation can be a significant cause of less engagement and low authority. And low authority is terrible news for your website since it means less visibility online.

Remember that search engines are also businesses, designed to show users what they are looking for.

“With an easily navigational website, you can be sure to be in Google’s good books,” says Neil Rollins from Haitna.

11. Incorrect Language Detection

It’s a global market, and audiences from all over the world use the web. That is why language declarations are essential. They help the search engine detect the language and serve users better. It is crucial to declare the page’s language with the lang attribute on the HTML tag.

When you copy HTML content from another site, the HTML tag in your content might specify a language different from the one you are using. This will make you miss out on potential audiences who would have otherwise visited your site and drastically pull down your ranking.
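
A copied language declaration is easy to miss by eye but easy to catch with a script. The sketch below reads the lang attribute from a page's HTML tag and compares its primary language code with the one you expect. The function names are illustrative assumptions.

```python
from html.parser import HTMLParser


class LangParser(HTMLParser):
    """Captures the lang attribute of the first <html> tag that declares one."""

    def __init__(self):
        super().__init__()
        self.lang = None

    def handle_starttag(self, tag, attrs):
        if tag == "html" and self.lang is None:
            value = (dict(attrs).get("lang") or "").strip().lower()
            self.lang = value or None


def declared_language(html: str):
    """Return the declared language code in lowercase, or None if absent."""
    parser = LangParser()
    parser.feed(html)
    return parser.lang


def language_matches(html: str, expected: str) -> bool:
    """Compare the primary language subtag (e.g. 'en' in 'en-US') to expected."""
    declared = declared_language(html)
    if declared is None:
        return False
    return declared.split("-")[0] == expected.lower()
```

For an English-language site, language_matches(page_html, "en") returning False signals either a missing declaration or one left over from copied markup.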

12. Robots.txt

Do you know that a simple “/” can harm your page ranking on SERP? Probably not.

An improperly placed “/” in the robots.txt file can be one of the most damaging characters in all of SEO. It is a common reason a site goes unindexed by search engines and loses its organic traffic.

Spiders read the robots.txt file to determine whether they can crawl the URLs on that site. So, robots.txt acts like a rulebook for crawling a website. And if you misconfigure it, your indexability and crawlability may go for a toss!

What you can do:

Go to yoursitename.com/robots.txt and ensure it doesn’t show “User-agent: *” followed by “Disallow: /”.
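
You can also test a robots.txt file programmatically. The sketch below uses the robots.txt parser that ships with Python's standard library to check whether a given URL may be crawled under a set of rules. The can_crawl wrapper and the example URLs are assumptions for illustration.

```python
from urllib.robotparser import RobotFileParser


def can_crawl(robots_txt: str, url: str, user_agent: str = "*") -> bool:
    """Return True if the robots.txt rules allow user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# A lone "/" after Disallow blocks the entire site for every crawler.
blocked = "User-agent: *\nDisallow: /"
print(can_crawl(blocked, "https://yoursitename.com/page"))   # False

# A scoped rule blocks only the listed path.
scoped = "User-agent: *\nDisallow: /admin/"
print(can_crawl(scoped, "https://yoursitename.com/blog"))    # True
```

The difference between the two rule sets is a single path after the “/”, which is why this file deserves a careful look after every site change.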

Key Takeaways

- Avoid technical SEO mistakes, which can severely damage your authority and ranking.

- Ensure your site has HTTPS security, which puts the users at ease.

- Concentrate on creating unique content with a low similarity index.

- Ensure your website loads in less than three seconds.

- Have a mobile-friendly website.

- Focus on continually checking for broken links and updating information on your site.

- Optimize your website to make it more crawlable and indexable.

- Having a user-friendly website can up your game like no other.